Develop and deploy devices like never before

Qbee enables a modern development and rollout workflow through remote access.

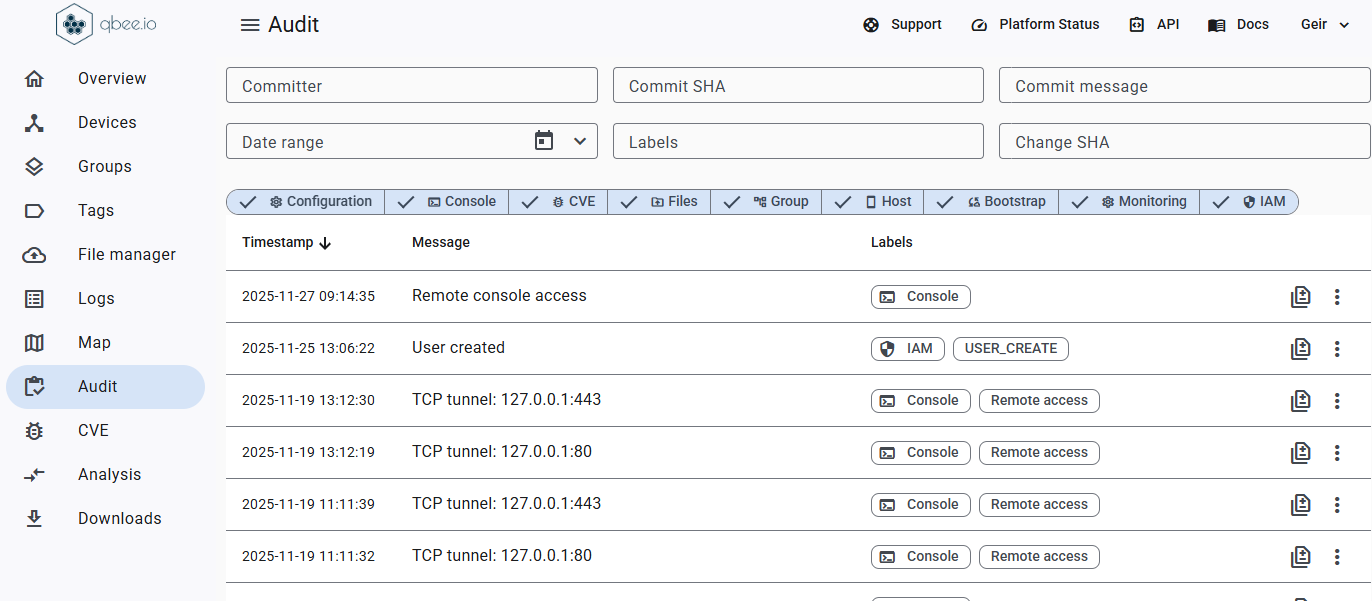

With over-the-air (OTA) updates, DevOps teams can manage devices with less risk and without the need for on-site technicians.

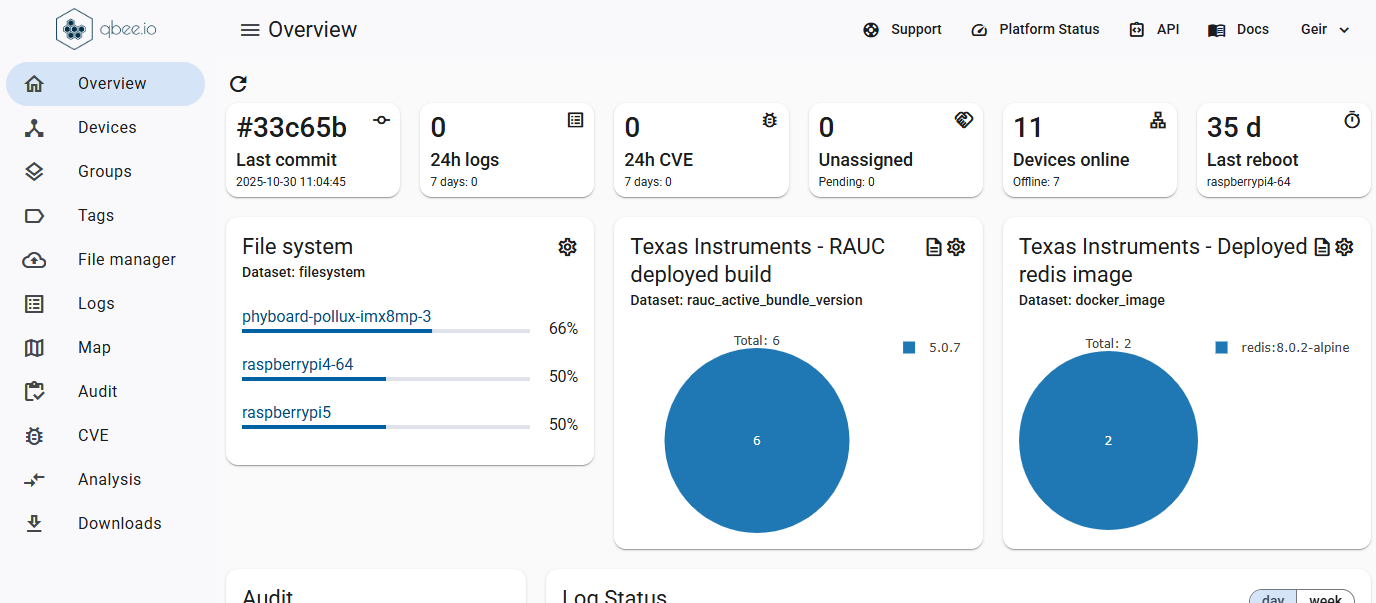

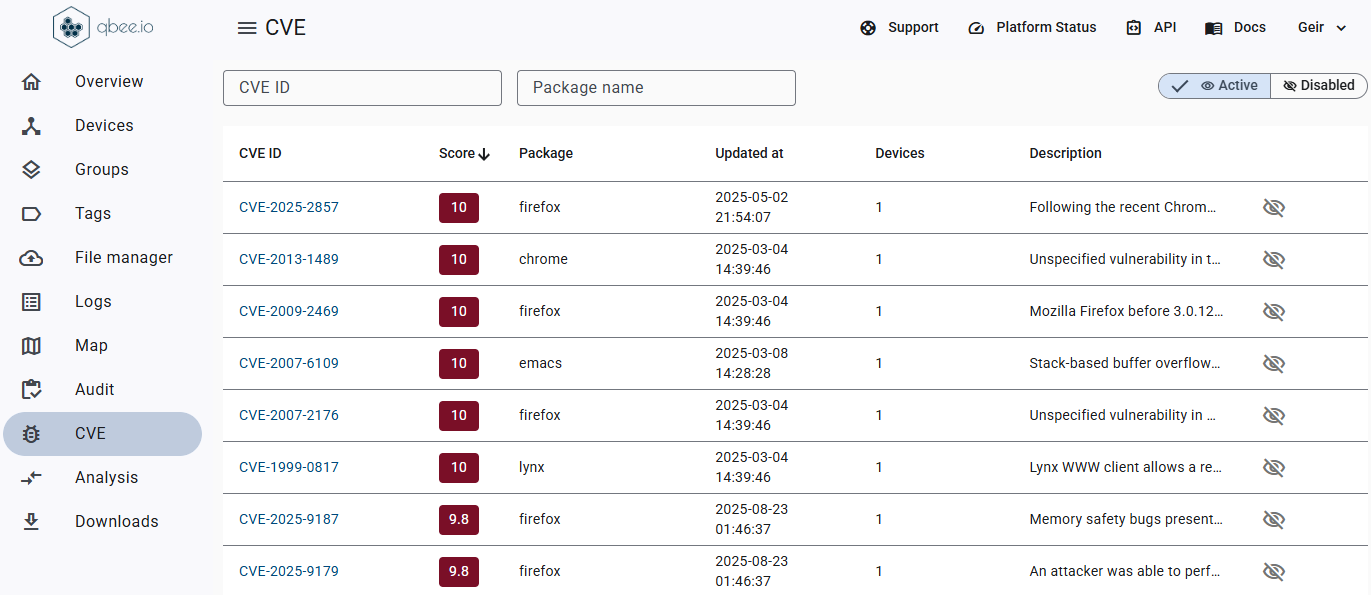

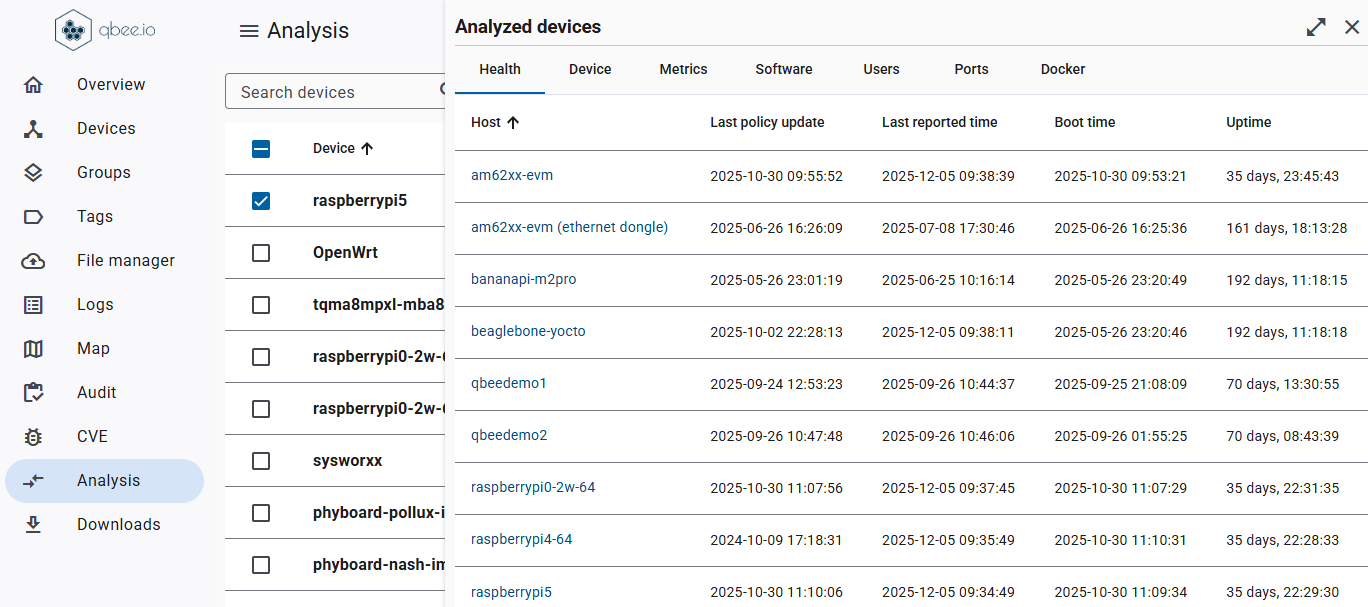

The result is faster innovation, higher quality, and clear visibility into what is running where, at all times.